I’m thrilled to be part of a new NSF-funded IMAX and digital 3D documentary film project that will introduce audiences to the major scientific instruments being used to explore the origins of the universe. Chief among these are the Large Hadron Collider at CERN and a new generation of supercomputers.

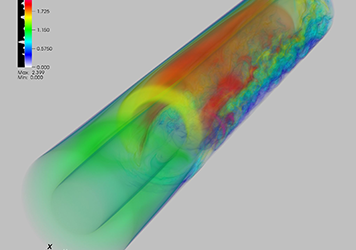

My specific contribution is advisory and relates to the role of supercomputing in this scientific enterprise. Systems like Mira accelerate discoveries in cosmology through large-scale scientific simulation and visualization of enormously complex physical phenomena. (Both simulation and visualization will be featured prominently in the film.) Supercomputers were recently used to generate the largest cosmology simulation ever, which will help the scientific community test theories against observational data, such as data from the next generation of sky surveys preparing to go online.

Filming will take place during 2013 and 2014 and will result in a 2D/3D giant-screen film, a dome planetarium film, museum exhibits, and other educational materials. It’s a great team of investigators that includes media communications guru Mark Kresser, UC Davis physics professor Manuel Calderon de la Barca, Franklin Institute’s Dale McCreedy, and IMAX film director Stephen Low.

A particularly interesting aspect of this outreach project is that it also supports a study of middle school girls’ interest and engagement in the topic. (Girls’ interest in science and math tends to plummet around this age due to several social factors.) Films like this are high-quality outreach projects that present complex scientific research to the public in an accessible and entertaining way. It’s also an excellent opportunity to gain insights into how to develop STEM content for an especially vulnerable group of learners.